For a different perspective on AI companions, see our Q&A with Brad Knox: How Can AI Companions Be Helpful, not Harmful?

AI models intended to provide companionship for people are on the rise. People are already frequently developing relationships with chatbots, seeking not just a personal assistant but a source of emotional support.

In response, apps dedicated to providing companionship (such as Character.ai or Replika) have recently grown to host tens of millions of users. Some companies are now putting AI into toys and desktop devices as well, bringing digital companions into the physical world. Many of these devices were on display at CES last month, including products designed specifically for children, seniors, and even your pets.

AI companions are designed to simulate human relationships by interacting with users like a friend would. But human-AI relationships are not well understood, and companies are facing concern about whether the benefits outweigh the risks and potential harm of these relationships, especially for young people. In addition to questions about users’ mental health and emotional well-being, sharing intimate personal information with a chatbot poses data privacy issues.

Nevertheless, more and more users are finding value in sharing their lives with AI. So how can we understand the bonds that form between humans and chatbots?

Jaime Banks is a professor at the Syracuse University School of Information Studies who researches the interactions between people and technology, in particular robots and AI. Banks spoke with IEEE Spectrum about how people perceive and relate to machines, and the emerging relationships between humans and their machine companions.

Defining AI Companionship

How do you define AI companionship?

Jaime Banks: My definition is evolving as we learn more about these relationships. For now, I define it as a connection between a human and a machine that’s dyadic, so there’s an exchange between them. It’s also sustained over time; a one-off interaction doesn’t count as a relationship. It’s positively valenced: we like being in it. And it’s autotelic, meaning we do it for its own sake. So there’s not some extrinsic motivation; it’s not defined by an ability to help us do our jobs or make us money.

I’ve recently been challenged by that definition, though, when I was developing an instrument to measure machine companionship. After developing the scale and working to initially validate it, I saw an interesting situation where some people do move toward this autotelic relationship pattern. “I appreciate my AI for what it is and I love it and I don’t want to change it.” It fit all those parts of the definition. But then there seems to be this other relational template that can actually be both appreciating the AI for its own sake, but also engaging it for utilitarian purposes.

That makes sense when we think about how people come to be in relationships with AI companions. They often don’t go into it purposefully seeking companionship. A lot of people go into using, for instance, ChatGPT for some other purpose and end up finding companionship through the course of those conversations. And we have these AI companion apps like Replika and Nomi and Paradot that are designed for social interaction. But that’s not to say that they couldn’t help you with practical topics.

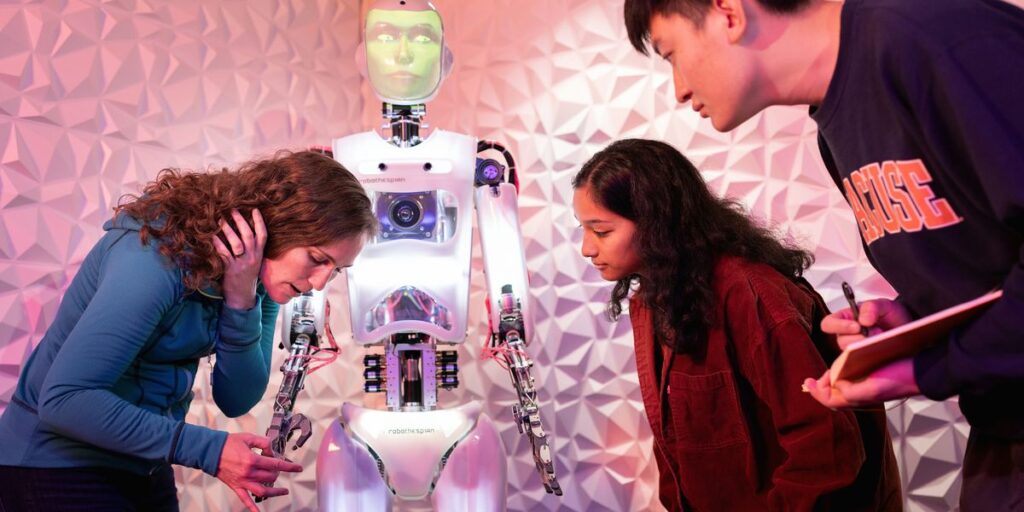

Jaime Banks customizes the software for an embodied AI social humanoid robot. Angela Ryan/Syracuse University

Different models are also programmed to have different “personalities.” How does that contribute to the relationship between humans and AI companions?

Banks: One of our Ph.D. students just finished a project about what happened when OpenAI demoted GPT-4o and the issues that people encountered, in terms of companionship experiences, when the personality of their AI just completely changed. It didn’t have the same depth. It couldn’t remember things in the same way.

That echoes what we saw a couple years ago with Replika. Because of legal issues, Replika disabled the erotic roleplay module for a period of time, and people described their companions as though they had been lobotomized, that they had this relationship and then one day they didn’t anymore. With my project on the tanking of the Soulmate app, many people in their reflection were like, “I’m never trusting AI companies again. I’m only going to have an AI companion if I can run it from my computer so I know that it will always be there.”

Benefits and Risks of AI Relationships

What are the benefits and risks of these relationships?

Banks: There’s a lot of talk about the risks and a little talk about benefits. But frankly, we are only just on the precipice of starting to have longitudinal data that might allow people to make causal claims. The headlines would have you believe that these are the end of mankind, that they’re going to make you commit suicide or abandon other humans. But a lot of those are based on these unfortunate but uncommon situations.

Most scholars gave up technological determinism as a perspective a long time ago. In the communication sciences at least, we don’t generally assume that machines make us do something, because we have some degree of agency in our interactions with technologies. Yet much of the fretting around potential risks is deterministic: AI companions make people delusional, make them suicidal, make them reject other relationships. A lot of people get real benefits from AI companions. They narrate experiences that are deeply meaningful to them. I think it’s irresponsible of us to discount those lived experiences.

When we think about concerns linking AI companions to loneliness, we don’t have much data that can support causal claims. Some studies suggest AI companions lead to loneliness, but other work suggests they reduce loneliness, and other work suggests that loneliness is what comes first. Social relatedness is one of our three intrinsic psychological needs, and if we don’t have that we will seek it out, whether it’s from a volleyball for a castaway, my dog, or an AI that will allow me to feel connected to something in my world.

Some people, and governments for that matter, may move toward a protective stance. For instance, there are issues around what gets done with the intimate data that you hand over to an agent owned and maintained by a company; that’s a very reasonable concern. Dealing with the potential for children to interact, where children don’t always navigate the boundaries between fiction and reality. There are real, valid concerns. However, we need some balance in also thinking about what people are getting from it that’s positive, productive, healthy. Scholars need to make sure we’re being cautious about our claims based on our data. And human interactants need to educate themselves.

Jaime Banks holds a mechanical hand. Angela Ryan/Syracuse University


Why do you think that AI companions are becoming more popular now?

Banks: I feel like we had this perfect storm, if you will, of the maturation of large language models and coming out of COVID, where people had been physically and sometimes socially isolated for quite some time. When those conditions converged, we had on our hands a believable social agent at a time when people were seeking social connection. Outside of that, we are increasingly just not nice to one another. So, it’s not entirely surprising that if I just don’t like the people around me, or I feel disconnected, that I would try to find some other outlet for feeling connected.

More recently there’s been a shift to embodied companions, in desktop devices or other formats beyond chatbots. How does that change the relationship, if it does?

Banks: I’m a part of a Facebook group about robotic companions and I watch how people talk, and it almost seems like it crosses this boundary between toy and companion. When you have a companion with a physical body, you are in some ways limited by the abilities of that body, whereas with digital-only AI, you have the ability to explore fantastic things: places that you would never be able to go with another physical entity, fantasy scenarios.

But in robotics, once we get into a space where there are bodies that are sophisticated, they become very expensive, and that means that they are not accessible to a lot of people. That’s what I’m observing in many of these online groups. These toylike bodies are still accessible, but they are also quite limiting.

Do you have any favorite examples from popular culture to help explain AI companionship, either how it is now or how it could be?

Banks: I really enjoy a lot of the short fiction in Clarkesworld magazine, because the stories push me to think about what questions we might need to answer now to be prepared for a future hybrid society. Top of mind are the stories “Wanting Things,” “Seven Sexy Cowboy Robots,” and “Today I am Paul.” Outside of that, I’ll point to the game Cyberpunk 2077, because the character Johnny Silverhand complicates the norms for what counts as a machine and what counts as companionship.
