If it feels these days as if everything in technology is about AI, that’s because it is. And nowhere is that more true than in the market for computer memory. Demand, and profitability, for the type of DRAM used to feed GPUs and other accelerators in AI data centers is so huge that it’s diverting supply away from other uses of memory and causing prices to skyrocket. According to Counterpoint Research, DRAM prices have risen 80 to 90 percent so far this quarter.

The biggest AI hardware companies say they have secured their chips as far out as 2028, but that leaves everybody else (makers of PCs, consumer gadgets, and everything else that needs to temporarily store a billion bits) scrambling to deal with scarce supply and inflated prices.

How did the electronics industry get into this mess, and more importantly, how will it get out? IEEE Spectrum asked economists and memory experts to explain. They say today’s situation is the result of a collision between the DRAM industry’s historic boom-and-bust cycle and an AI hardware infrastructure build-out that is without precedent in its scale. And, barring some major collapse in the AI sector, it will take years for new capacity and new technology to bring supply in line with demand. Prices might stay high even then.

To understand both ends of the story, you need to know the main culprit in the supply-and-demand swing: high-bandwidth memory, or HBM.

What’s HBM?

HBM is the DRAM industry’s attempt to short-circuit the slowing pace of Moore’s Law by using 3D chip packaging technology. Each HBM chip is made up of as many as 12 thinned-down DRAM chips called dies. Each die contains a number of vertical connections called through-silicon vias (TSVs). The dies are piled atop one another and connected by arrays of microscopic solder balls aligned to the TSVs. This DRAM tower (well, at about 750 micrometers thick, it’s more of a brutalist office block than a tower) is then stacked atop what’s called the base die, which shuttles bits between the memory dies and the processor.

This complex piece of technology is then set within a millimeter of a GPU or other AI accelerator, to which it’s linked by as many as 2,048 micrometer-scale connections. HBM chips are attached on two sides of the processor, and the GPU and memory are packaged together as a single unit.
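To first order, a stack’s peak bandwidth is the width of its interface multiplied by the data rate of each line. The sketch below is a back-of-envelope illustration under loudly stated assumptions: it treats all 2,048 connections as data lines (a simplification, since real interfaces also need command, address, and power connections), uses a hypothetical rate of 8 gigabits per second per line, and uses eight stacks purely for illustration. The function names are made up for this example, and the results are not the specifications of any shipping product.

```python
# Back-of-envelope peak bandwidth for HBM stacks.
# Assumptions (hypothetical): every connection is a data line, and all lines
# run at the same per-line rate. Real parts differ.

def stack_bandwidth_gbytes(data_lines: int, gbits_per_line: float) -> float:
    """Peak bandwidth of one stack in gigabytes per second."""
    return data_lines * gbits_per_line / 8  # 8 bits per byte


def package_bandwidth_tbytes(stacks: int, data_lines: int, gbits_per_line: float) -> float:
    """Peak bandwidth of all stacks on one package in terabytes per second."""
    return stacks * stack_bandwidth_gbytes(data_lines, gbits_per_line) / 1000


if __name__ == "__main__":
    # 2,048 lines at a hypothetical 8 Gb/s each.
    print(stack_bandwidth_gbytes(2048, 8.0))       # 2048.0 GB/s, about 2 TB/s
    # A hypothetical package carrying eight such stacks.
    print(package_bandwidth_tbytes(8, 2048, 8.0))  # 16.384 TB/s
```

Because bandwidth scales with the number of lines, the way to get more of it without raising per-line speed is more connections, which is exactly what placing the memory within a millimeter of the processor makes practical.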

The idea behind such a tight, highly connected squeeze with the GPU is to knock down what’s called the memory wall. That’s the barrier, in energy and time, of bringing the terabytes per second of data needed to run large language models into the GPU. Memory bandwidth is a key limiter to how fast LLMs can run.
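A common back-of-envelope bound shows why bandwidth matters so much. When a model generates text one token at a time for a single user, essentially all of its weights must be streamed from memory for every token, so tokens per second cannot exceed memory bandwidth divided by the size of the weights. The sketch assumes a hypothetical 70-billion-parameter model stored at 2 bytes per parameter and 8 TB/s of bandwidth, and it deliberately ignores batching, the KV cache, and compute time.

```python
# Upper bound on single-stream decoding speed when memory bandwidth is the
# bottleneck. Simplified: ignores batching, the KV cache, and compute time.

def max_tokens_per_second(params: float, bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    weight_bytes = params * bytes_per_param  # bytes read per generated token
    return bandwidth_bytes_per_s / weight_bytes


if __name__ == "__main__":
    # Hypothetical: 70e9 parameters, 2 bytes each, 8 TB/s of memory bandwidth.
    rate = max_tokens_per_second(70e9, 2, 8e12)
    print(f"{rate:.1f} tokens/s at most")  # 57.1 tokens/s at most
```

Doubling bandwidth doubles the bound, while adding arithmetic units does nothing for it, which is the memory wall in a single line of division.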

As a technology, HBM has been around for more than 10 years, and DRAM makers have been busy boosting its capability.

As the size of AI models has grown, so has HBM’s importance to the GPU. But that has come at a cost. SemiAnalysis estimates that HBM generally costs three times as much as other types of memory and accounts for 50 percent or more of the cost of the packaged GPU.

Origins of the memory chip shortage

Memory and storage industry watchers agree that DRAM is a highly cyclical industry with huge booms and devastating busts. With new fabs costing US $15 billion or more, firms are extremely reluctant to expand and may only have the cash to do so during boom times, explains Thomas Coughlin, a storage and memory expert and president of Coughlin Associates. But building such a fab and getting it up and running can take 18 months or more, practically guaranteeing that new capacity arrives well after the initial surge in demand, flooding the market and depressing prices.
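The effect of that construction lag can be illustrated with a toy simulation in the spirit of the economists’ cobweb model. This is a hypothetical sketch, not Coughlin’s analysis: demand jumps 50 percent at quarter 4, each quarter firms order new capacity equal to half of the current shortfall without counting fabs already under construction, and every order takes six quarters (the 18 months cited above) to come online. All parameters are invented for illustration.

```python
from collections import deque

# Toy model of lagged fab capacity. All parameters are hypothetical.
def simulate(quarters: int = 24, lag: int = 6, response: float = 0.5):
    supply = 100.0
    # Capacity ordered in each of the last `lag` quarters, oldest first.
    pipeline = deque([0.0] * lag)
    history = []
    for t in range(quarters):
        demand = 100.0 if t < 4 else 150.0   # demand steps up at quarter 4
        supply += pipeline.popleft()         # orders from `lag` quarters ago come online
        shortfall = max(0.0, demand - supply)
        # Naive rule: respond to today's shortfall, ignoring capacity already ordered.
        pipeline.append(response * shortfall)
        history.append((t, demand, supply, demand / supply))
    return history


if __name__ == "__main__":
    for t, demand, supply, tightness in simulate():
        print(f"Q{t:2d} demand={demand:6.1f} supply={supply:6.1f} demand/supply={tightness:.2f}")
```

In this toy run, supply stays flat for six quarters of shortage, then capacity keeps arriving after demand has been met, ending at 262.5 units against demand of 150: a glut. An ordering rule that subtracted capacity already in the pipeline would avoid the overshoot in this simplified setting, but real firms must also forecast demand years ahead.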

The origins of today’s cycle, says Coughlin, go all the way back to the chip supply panic surrounding the COVID-19 pandemic. To avoid supply-chain stumbles and support the rapid shift to remote work, hyperscalers (data center giants like Amazon, Google, and Microsoft) bought up huge inventories of memory and storage, boosting prices, he notes.

But then supply became more regular and data center expansion fell off in 2022, causing memory and storage prices to plummet. The downturn continued into 2023 and even led big memory and storage companies such as Samsung to cut production by 50 percent to try to keep prices from falling below the cost of manufacturing, says Coughlin. It was a rare and fairly desperate move, because companies typically have to run plants at full capacity just to earn back their value.

After a recovery began in late 2023, “all the memory and storage companies were very wary of increasing their production capacity again,” says Coughlin. “Thus there was little or no investment in new production capacity in 2024 and through most of 2025.”

The AI data center boom

That lack of new investment is colliding headlong with a huge boost in demand from new data centers. Globally, there are nearly 2,000 new data centers either planned or under construction right now, according to Data Center Map. If they’re all built, it would represent a jump of roughly 20 percent in the global supply, which stands at around 9,000 facilities now.

If the current build-out continues at pace, McKinsey predicts companies will spend $7 trillion by 2030, with the bulk of that ($5.2 trillion) going to AI-focused data centers. Of that chunk, $3.3 trillion will go toward servers, data storage, and network equipment, the firm predicts.

The biggest beneficiary so far of the AI data center boom is certainly GPU maker Nvidia. Revenue for its data center business went from barely $1 billion in the final quarter of 2019 to $51 billion in the quarter that ended in October 2025. Over this period, its server GPUs have demanded not just more and more gigabytes of DRAM but an increasing number of DRAM chips. The recently released B300 uses eight HBM chips, each of which is a stack of 12 DRAM dies. Competitors’ use of HBM has largely mirrored Nvidia’s. AMD’s MI350 GPU, for example, also uses eight 12-die chips.
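Those counts add up quickly. A minimal tally using only the stack and die counts given above for the B300 and the MI350; it ignores the base die under each stack and any dies lost to manufacturing yield, so it understates the silicon each package actually consumes.

```python
# DRAM dies consumed per GPU package, from the stack counts in the article.
# Excludes the base die under each stack and dies lost to yield.
packages = {
    "Nvidia B300": {"stacks": 8, "dies_per_stack": 12},
    "AMD MI350": {"stacks": 8, "dies_per_stack": 12},
}


def dram_dies(stacks: int, dies_per_stack: int) -> int:
    return stacks * dies_per_stack


if __name__ == "__main__":
    for name, spec in packages.items():
        print(name, dram_dies(**spec))  # 96 DRAM dies each
```

At 96 memory dies per package, every 1,000 accelerators shipped represents 96,000 DRAM dies.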

With so much demand, an increasing fraction of DRAM makers’ revenue comes from HBM. Micron, the number three producer behind SK Hynix and Samsung, reported that HBM and other cloud-related memory went from 17 percent of its DRAM revenue in 2023 to nearly 50 percent in 2025.

Micron predicts the total market for HBM will grow from $35 billion in 2025 to $100 billion by 2028, a figure larger than the entire DRAM market in 2024, CEO Sanjay Mehrotra told analysts in December. That is two years sooner than Micron had previously expected the market to reach that figure. Across the industry, demand will outstrip supply “considerably… for the foreseeable future,” he said.
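Micron’s forecast implies a steep compound annual growth rate. A quick check, assuming the forecast spans three years of compounding from 2025 to 2028:

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    rate = cagr(35e9, 100e9, 3)  # HBM market, 2025 to 2028
    print(f"{rate:.1%}")  # 41.9%
```

Growth of roughly 42 percent a year, sustained for three years, is the demand that new fabs with multiyear lead times are trying to chase.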

Future DRAM supply and technology

“There are two ways to address supply issues with DRAM: with innovation or with building more fabs,” explains Mina Kim, an economist with Mkecon Insights. “As DRAM scaling has become more difficult, the industry has turned to advanced packaging… which is just using more DRAM.”

Micron, Samsung, and SK Hynix together make up the vast majority of the memory and storage markets, and all three have new fabs and facilities in the works. However, these are unlikely to contribute meaningfully to bringing down prices.

Micron is in the process of building an HBM fab in Singapore that should be in production in 2027. And it’s retooling a fab it purchased from PSMC in Taiwan that will begin production in the second half of 2027. Last month, Micron broke ground on what will be a DRAM fab complex in Onondaga County, N.Y. It won’t be in full production until 2030.

Samsung plans to start production at a new plant in Pyeongtaek, South Korea, in 2028.

SK Hynix is building HBM and packaging facilities in West Lafayette, Indiana, set to begin production by the end of 2028, and an HBM fab it’s building in Cheongju, South Korea, should be complete in 2027.

Describing his sense of the DRAM market, Intel CEO Lip-Bu Tan told attendees at the Cisco AI Summit last week: “There’s no relief until 2028.”

With these expansions unable to contribute for several years, other factors will be needed to increase supply. “Relief will come from a combination of incremental capacity expansions by existing DRAM leaders, yield improvements in advanced packaging, and a broader diversification of supply chains,” says Shawn DuBravac, chief economist for the Global Electronics Association (formerly the IPC). “New fabs will help at the margin, but the faster gains will come from process learning, better [DRAM] stacking efficiency, and tighter coordination between memory suppliers and AI chip designers.”

So, will prices come down once some of these new plants come online? Don’t bet on it. “In general, economists find that prices come down much more slowly and reluctantly than they go up. DRAM today is unlikely to be an exception to this general observation, especially given the insatiable demand for compute,” says Kim.

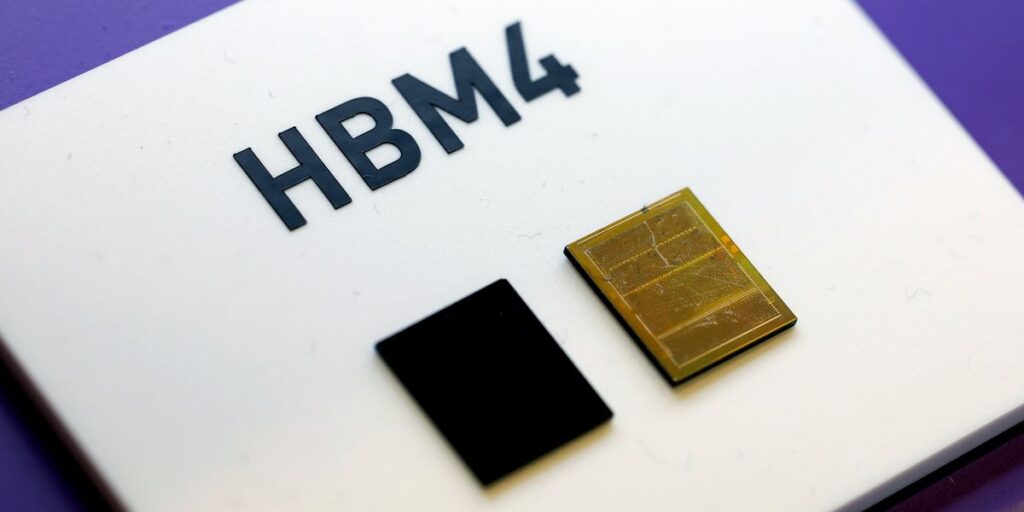

In the meantime, technologies are in the works that could make HBM an even bigger consumer of silicon. The HBM4 standard can accommodate 16 stacked DRAM dies, even though today’s chips use only 12. Getting to 16 has a lot to do with chip stacking technology. Conducting heat through the HBM “layer cake” of silicon, solder, and support material is a key limiter both to stacking higher and to repositioning HBM inside the package to get even more bandwidth.

SK Hynix claims a heat-conduction advantage through a manufacturing process called advanced MR-MUF (mass reflow molded underfill). Further out, an alternative chip stacking technology called hybrid bonding could help heat conduction by reducing the die-to-die vertical distance essentially to zero. In 2024, researchers at Samsung showed they could produce a 16-high stack with hybrid bonding, and they suggested that 20 dies was not out of reach.
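Capacity per stack grows in proportion to the number of dies, and so does the silicon each stack consumes. The sketch compares today’s 12 dies, the 16 that HBM4 allows, and the 20 the Samsung researchers suggested. It assumes a hypothetical 24-gigabit die, so the absolute capacities are illustrative only; the ratios between stack heights do not depend on that assumption.

```python
# Capacity of one HBM stack as a function of stack height.
# DIE_GBITS is a hypothetical per-die capacity chosen for illustration.
DIE_GBITS = 24


def stack_capacity_gbytes(dies: int, die_gbits: int = DIE_GBITS) -> float:
    return dies * die_gbits / 8  # 8 bits per byte


if __name__ == "__main__":
    base = stack_capacity_gbytes(12)
    for dies in (12, 16, 20):
        cap = stack_capacity_gbytes(dies)
        print(f"{dies}-high: {cap:.0f} GB per stack ({cap / base:.2f}x a 12-high stack)")
```

A 16-high stack uses a third more DRAM dies than a 12-high one, and a 20-high stack two-thirds more, which is why taller stacks would deepen HBM’s pull on wafer supply.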
